Data annotation is the backbone of AI development, ensuring that AI models can effectively learn from data and produce accurate outcomes.

However, without proper quality control in data annotation, the accuracy, integrity, and fairness of AI models can significantly suffer.

This blog explores why data annotation quality control is crucial to AI accuracy and outlines best practices for maintaining high-quality annotation standards.

Why is Quality Control Essential in Data Annotation?

Quality control in data annotation directly impacts AI model performance. Inaccurate annotations can lead to faulty AI predictions, which can be especially dangerous in high-stakes fields such as healthcare or autonomous driving.

For instance, biased or erroneous data can skew results, making AI systems unreliable in real-world applications.

A robust quality control process helps keep annotations consistent, accurate, and relevant to the task at hand.

Consistent annotations help AI models generalize better, allowing them to perform accurately even with unseen data.

Maintaining annotation quality also helps limit model degradation over time. As real-world data evolves, data drift and anomalies can make older labels less representative, and regular quality checks catch this early.

How to Improve Data Annotation Quality

Improving data annotation quality starts with clear guidelines and consistent training for annotators. A few essential techniques include:

- Multiple Annotators and Consensus-Building: Having several annotators label the same items and calculating inter-annotator agreement (with metrics like Fleiss’ Kappa) exposes inconsistent labeling and reduces the impact of individual human error.

- Regular Audits and Reviews: Ongoing audits and reviewing samples of annotated data help detect and correct any inconsistencies before they can significantly impact the model.

- Advanced Tools and Automation: Leveraging AI tools for pre-annotation can boost efficiency. Automated tools handle routine tasks, while human annotators focus on refining the more complex cases.

By combining human expertise with AI-based tools, organizations can streamline the annotation process while ensuring quality and reducing manual effort.
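To make the agreement check above concrete, here is a minimal sketch of Fleiss’ Kappa in plain Python. The function name `fleiss_kappa` and the toy labels are illustrative assumptions, not part of any specific annotation tool, and the sketch assumes every item was labeled by the same number of annotators.

```python
from collections import Counter


def fleiss_kappa(ratings, categories):
    """Fleiss' Kappa for a list of items, each a list of labels.

    ratings: e.g. [["cat", "cat", "dog"], ...], one inner list per item.
    categories: every label that annotators were allowed to use.
    """
    n_items = len(ratings)
    n_raters = len(ratings[0])
    if n_raters < 2 or any(len(r) != n_raters for r in ratings):
        raise ValueError("each item needs the same number (>= 2) of ratings")

    # counts[i][c] = number of annotators who gave item i the label c
    counts = [Counter(r) for r in ratings]

    # Observed agreement, averaged over items
    p_items = [
        (sum(c[cat] ** 2 for cat in categories) - n_raters)
        / (n_raters * (n_raters - 1))
        for c in counts
    ]
    p_bar = sum(p_items) / n_items

    # Agreement expected by chance, from overall label proportions
    p_cats = [
        sum(c[cat] for c in counts) / (n_items * n_raters) for cat in categories
    ]
    p_e = sum(p ** 2 for p in p_cats)

    if p_e == 1.0:
        return 1.0  # only one label was ever used, so agreement is trivial
    return (p_bar - p_e) / (1 - p_e)


# Three annotators in full agreement on two items
print(fleiss_kappa([["cat"] * 3, ["dog"] * 3], ["cat", "dog"]))  # 1.0
```

Values near 1 indicate strong agreement, while values near 0 suggest agreement no better than chance, which is usually a sign that the guidelines need another look.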

The Impact of Poor Data Annotation Quality in AI

Poor-quality annotations can have wide-reaching consequences.

For example, biased data in healthcare AI could lead to incorrect diagnoses, disproportionately affecting specific demographic groups.

Similarly, for autonomous vehicles, improper annotation of objects like pedestrians can result in dangerous situations.

In real-world cases, biased or inaccurate annotations have already caused significant problems.

For instance, facial recognition AI has been criticized for its higher error rates when identifying people of color, a result of biased training data.

These cases emphasize why quality control in data annotation is not just a technical requirement but an ethical one.

Best Practices for Maintaining Data Annotation Quality

Maintaining data annotation quality requires continuous improvement. Some best practices include:

- Inter-Annotator Agreement Metrics: Use statistical methods such as Cohen’s Kappa (for two annotators) or Fleiss’ Kappa (for three or more) to measure label consistency across annotators. Tracking these scores shows whether even subjective labeling tasks are being handled reliably.

- Diverse Datasets: To avoid bias, it is essential to include a wide range of data types and demographics, ensuring the model learns from a balanced and representative dataset.

- Continuous Training and Feedback: Annotators should be retrained periodically, with feedback loops built into the process to address any recurring issues or edge cases.
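As a rough illustration of the agreement metric in the list above, the sketch below computes Cohen’s Kappa for two annotators who labeled the same items. The function name `cohens_kappa` and the sample labels are assumptions made for this example.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's Kappa for two annotators who labeled the same items in order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)

    # Share of items where both annotators chose the same label
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # Agreement expected by chance, from each annotator's label frequencies
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    all_labels = freq_a.keys() | freq_b.keys()
    expected = sum(freq_a[c] * freq_b[c] for c in all_labels) / (n * n)

    if expected == 1.0:
        return 1.0  # both annotators used a single identical label throughout
    return (observed - expected) / (1 - expected)


annotator_1 = ["pos", "pos", "neg", "neg"]
annotator_2 = ["pos", "neg", "neg", "neg"]
print(cohens_kappa(annotator_1, annotator_2))  # 0.5
```

Here the annotators agree on three of four items (75%), but half of that agreement could happen by chance, so the kappa score is 0.5 rather than 0.75.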

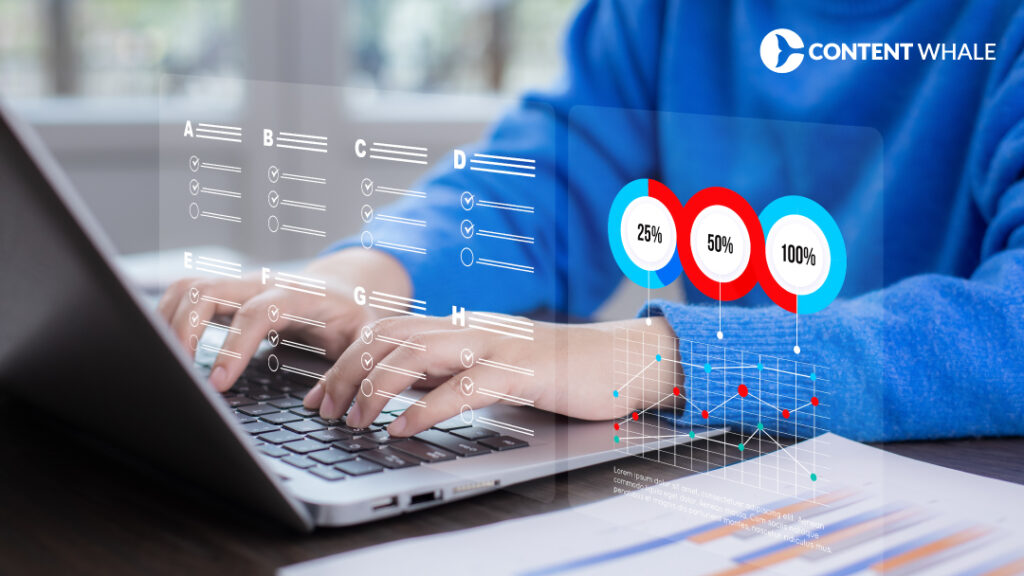

| # | Best Practice | Description | Benefits |
|---|---|---|---|
| 1 | Clear Guidelines | Develop and provide comprehensive annotation guidelines. | Ensures uniformity and clarity in tasks. |
| 2 | Annotator Training | Conduct thorough training sessions for annotators. | Reduces errors and increases confidence in tasks. |
| 3 | Quality Assurance Checks | Implement regular reviews and audits of annotated data. | Maintains high standards and catches mistakes early. |
| 4 | Feedback Loops | Create systems for ongoing feedback to annotators. | Encourages improvement and engagement. |
| 5 | Use of Multiple Annotators | Utilize multiple annotators for each task to ensure consistency. | Minimizes bias and enhances reliability. |
| 6 | Annotation Tools and Technology | Invest in reliable and efficient annotation tools. | Improves speed and accuracy of annotations. |
| 7 | Continuous Improvement | Regularly update guidelines and practices based on feedback. | Keeps the process dynamic and responsive. |
| 8 | Performance Metrics | Establish metrics for assessing annotation quality. | Provides objective measures for evaluation. |
| 9 | Collaborative Annotation | Foster collaboration among annotators for sharing best practices. | Builds community and enhances quality. |
| 10 | Documentation | Maintain detailed records of processes and changes. | Ensures consistency and aids training. |

Conclusion

Ensuring high-quality data annotation is not just about improving AI model performance—it is also about ensuring ethical, reliable, and fair AI systems.

Organizations must adopt a multi-pronged approach that includes advanced tools, continuous audits, and robust training for annotators to mitigate risks and maintain the highest standards in AI development.

By following the outlined strategies, companies can improve AI accuracy while ensuring their models remain trustworthy and unbiased.

Looking to ensure your AI systems perform at their best in 2024? At Content Whale, we specialize in data annotation quality control customized to your specific needs. From meticulous labeling to comprehensive bias audits, we ensure your AI models are trained on high-quality data. Let us help you achieve unparalleled accuracy and reliability. Contact us today for more information!

FAQs

1. Why is data annotation quality control essential for AI?

Data annotation quality control in AI is crucial because the success of an AI model heavily depends on the quality of the annotated data it is trained on. If the data is inconsistent, poorly labeled, or biased, the AI model’s predictions will reflect these shortcomings, resulting in unreliable and skewed outputs. High-quality annotation ensures AI data quality, leading to improved model performance and more accurate decision-making.

Moreover, quality control prevents issues like data drift and labeling inconsistencies, which can occur over time, especially in dynamic environments where data changes frequently. Regular quality checks ensure that the AI remains effective and adaptable to new data inputs. By maintaining data labeling accuracy through rigorous quality control, organizations can achieve better results across applications like autonomous driving, healthcare diagnostics, and fraud detection in finance.

2. How do annotation errors impact AI model performance?

Annotation errors can significantly degrade AI model performance, as they introduce bias and inaccuracies into the training process. For example, in medical AI applications, incorrectly labeled data could result in misdiagnoses, potentially endangering patients’ lives. Similarly, in natural language processing (NLP), poorly annotated text can lead to misunderstandings in sentiment analysis, making chatbots or customer service applications ineffective.

Such errors can also undermine data integrity, causing the AI model to generalize poorly when faced with new, unseen data. To mitigate these risks, it is essential to implement strict quality checks, including inter-annotator agreement metrics and automated error detection tools. These practices help keep labels accurate and limit the negative effects of human error.

3. What are the best techniques to ensure high-quality data annotation?

Ensuring high-quality data annotation requires a combination of human expertise and automated tools. Some of the best techniques include:

- Clear Annotation Guidelines: Well-documented guidelines ensure consistency across annotators, reducing ambiguity and errors. Guidelines should cover edge cases, label definitions, and examples of correct annotation.

- Multiple Annotators: Using multiple annotators for the same data and calculating inter-annotator agreement (e.g., Fleiss’ Kappa) helps achieve data labeling accuracy and reduces subjectivity.

- Regular Audits and Spot Checks: Conducting periodic reviews of annotated data helps catch errors early and ensure AI data integrity. Audits should be integrated into the annotation process to prevent long-term quality degradation.

- Automated Pre-Annotation Tools: Leveraging AI to pre-annotate data can increase efficiency and reduce human error. Human annotators can then focus on correcting and refining the annotations, which keeps a human quality check on the final labels.

- Continuous Feedback Loops: Regular feedback to annotators helps in improving their performance over time, ensuring that data annotation quality control is continuously optimized.
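The audit and spot-check technique in the list above could look something like the following sketch: draw a reproducible random sample for review, then estimate the error rate of the whole batch from the errors found. The helper names, the sample size, and the use of a Wilson score interval are choices made for this example, not a prescribed method.

```python
import math
import random


def draw_audit_sample(item_ids, sample_size, seed=0):
    """Pick a reproducible random subset of annotated items to review."""
    items = list(item_ids)
    rng = random.Random(seed)  # fixed seed so the same audit can be re-run
    return rng.sample(items, min(sample_size, len(items)))


def wilson_interval(errors, n, z=1.96):
    """Approximate 95% confidence interval for the true error rate."""
    if n <= 0:
        raise ValueError("the audit sample must contain at least one item")
    p = errors / n
    denom = 1 + z * z / n
    centre = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return max(0.0, centre - half), min(1.0, centre + half)


# Hypothetical batch of 1,000 items; reviewers check 100 and find 5 errors
sample = draw_audit_sample(range(1000), 100)
low, high = wilson_interval(errors=5, n=len(sample))
print(f"estimated error rate between {low:.1%} and {high:.1%}")
```

Reporting a range instead of a single percentage makes it clearer when a sample is too small to support a decision, such as accepting or re-labeling a batch.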

4. How does automation help in maintaining annotation quality?

Automation plays a significant role in maintaining data annotation quality control by speeding up the process and reducing human errors. Automated tools, such as deep learning algorithms, can perform pre-annotation tasks, labeling large volumes of data quickly. This reduces the manual workload and allows human annotators to focus on refining the data where necessary.
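One common way to split work between a pre-annotation model and human annotators is to route items by the model's confidence. The sketch below assumes each pre-annotation carries a confidence score between 0 and 1; the `PreAnnotation` class, the `route_for_review` function, and the 0.9 threshold are all hypothetical and would need tuning for a real project.

```python
from dataclasses import dataclass


@dataclass
class PreAnnotation:
    item_id: str
    label: str
    confidence: float  # model's score in [0, 1]; assumed to be available


def route_for_review(pre_annotations, threshold=0.9):
    """Split model-generated labels into auto-accepted and human-review queues."""
    auto_accepted, needs_review = [], []
    for pre in pre_annotations:
        if pre.confidence >= threshold:
            auto_accepted.append(pre)
        else:
            needs_review.append(pre)
    return auto_accepted, needs_review


batch = [
    PreAnnotation("img-001", "pedestrian", 0.97),
    PreAnnotation("img-002", "cyclist", 0.62),
    PreAnnotation("img-003", "pedestrian", 0.91),
]
accepted, review = route_for_review(batch)
print([p.item_id for p in accepted])  # ['img-001', 'img-003']
print([p.item_id for p in review])    # ['img-002']
```

Auto-accepted labels are still worth including in periodic audits, since a confident model can be confidently wrong.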

Moreover, automation can assist in identifying annotation errors by flagging inconsistencies across large datasets. For example, AI-driven quality assurance systems can detect when two annotators provide conflicting labels and flag this for review. Automation not only boosts efficiency but also ensures that the AI data quality is consistent across different data types, from text to images and video annotations. This reduces costs while maintaining a high level of data labeling accuracy and AI data integrity.
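The conflict-flagging idea can be sketched in a few lines. This example assumes annotations are stored as a mapping from item ID to the list of labels it received; the function name `resolve_labels` and the `min_agreement` parameter are illustrative, not taken from a particular tool.

```python
from collections import Counter


def resolve_labels(annotations, min_agreement=1.0):
    """Accept labels with enough agreement and flag the rest for review.

    annotations: dict mapping item_id -> list of labels from annotators.
    min_agreement: share of annotators that must agree (1.0 = unanimous).
    """
    consensus, flagged = {}, []
    for item_id, labels in annotations.items():
        if not labels:
            flagged.append(item_id)  # nothing to vote on
            continue
        top_label, votes = Counter(labels).most_common(1)[0]
        if votes / len(labels) >= min_agreement:
            consensus[item_id] = top_label
        else:
            flagged.append(item_id)
    return consensus, flagged


annotations = {
    "doc-1": ["positive", "positive"],
    "doc-2": ["positive", "negative"],
    "doc-3": ["negative", "negative", "positive"],
}
consensus, flagged = resolve_labels(annotations)
print(consensus)  # {'doc-1': 'positive'}
print(flagged)    # ['doc-2', 'doc-3']
```

Lowering `min_agreement` (for example to 0.6) accepts a clear majority and sends only closer splits to a reviewer, which trades some strictness for less manual work.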

5. Can data annotation quality control prevent bias in AI models?

Yes, proper data annotation quality control is one of the most effective ways to prevent bias in AI models. Bias in AI often arises from imbalanced or improperly labeled training data. For example, if an AI system used in hiring is trained on a dataset that contains gender-biased annotations, it may perpetuate these biases in its predictions.

Quality control can mitigate this by ensuring that datasets are diverse and representative of all relevant demographics. Techniques like regular audits, multiple annotators, and consensus-building help eliminate annotation errors that might introduce bias. Additionally, using diverse teams of annotators can reduce the chances of unconscious bias affecting the data labeling process.

Maintaining AI data integrity and ensuring that data is labeled consistently and fairly across different demographic groups allows AI models to produce more equitable outcomes. Organizations must also monitor for biases throughout the development lifecycle, refining both the datasets and the annotation processes to ensure fairness in their AI applications.
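One simple way to monitor labeling consistency across groups is to compare annotation error rates per group on an audited sample. The sketch below assumes each audited record holds a group name, the label the annotator assigned, and a reviewer-verified label; the group names, function names, and the 5-point gap are hypothetical choices for this example.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """records: iterable of (group, assigned_label, verified_label) tuples."""
    totals, errors = defaultdict(int), defaultdict(int)
    for group, assigned, verified in records:
        totals[group] += 1
        if assigned != verified:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


def groups_above_gap(rates, max_gap=0.05):
    """Return groups whose error rate exceeds the best group's by more than max_gap."""
    best = min(rates.values())
    return sorted(g for g, rate in rates.items() if rate - best > max_gap)


# Hypothetical audit: 10 records per group, with 1 and 3 labeling errors
audit = (
    [("group_a", "benign", "benign")] * 9
    + [("group_a", "benign", "malignant")]
    + [("group_b", "benign", "benign")] * 7
    + [("group_b", "benign", "malignant")] * 3
)
rates = error_rate_by_group(audit)
print(rates)                    # {'group_a': 0.1, 'group_b': 0.3}
print(groups_above_gap(rates))  # ['group_b']
```

A flagged group is a prompt for a closer look, such as reviewing the guidelines or adding examples for that group, not proof of bias on its own.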